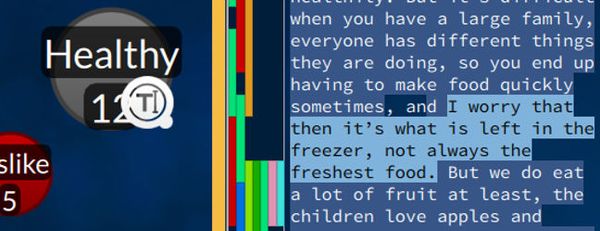

At the moment (touch wood!), everything is in place for a launch next week, which is a really exciting place to be after many years of effort. From that day, anyone will be able to download Quirkos, try it free for a month, and then buy a licence if it helps them in their work.